Scrape lists of URLs

Sometimes we want to scrape multiple pages that share a similar layout. For example:

Each list page's URL follows the same format, so we can easily construct the URLs of all the list pages to scrape. For example:

https://www.xxx.com/result?pageNo=1

https://www.xxx.com/result?pageNo=2

https://www.xxx.com/result?pageNo=3

https://www.xxx.com/result?pageNo=4
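A URL list like the one above does not have to be typed by hand. The following is a minimal sketch of how it could be generated outside NDS, assuming the only varying part is the `pageNo` query parameter. The function name `build_page_urls` and the constant `BASE_URL` are made up for illustration, and `www.xxx.com` is the placeholder domain used in the text.

```python
from urllib.parse import urlencode

# Placeholder address taken from the example above; replace with the real site.
BASE_URL = "https://www.xxx.com/result"


def build_page_urls(first_page: int, last_page: int) -> list[str]:
    """Return one list-page URL per page number, both ends included."""
    return [
        f"{BASE_URL}?{urlencode({'pageNo': page})}"
        for page in range(first_page, last_page + 1)
    ]


if __name__ == "__main__":
    # Print one URL per line so the output can be copied as a list.
    for url in build_page_urls(1, 4):
        print(url)
```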

We have a list of product IDs and can construct a URL for each of these products. For example:

https://www.xxx.com/list?productId=A001

https://www.yyy.com/list?productId=A002

https://www.yyy.com/list?productId=A003

https://www.yyy.com/list?productId=A004
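When the product IDs already exist as a list (for example, exported from a spreadsheet), the URLs can be derived from them mechanically. The sketch below assumes each product page differs only in its `productId` query parameter. `build_product_urls` is a hypothetical helper, and the base address is the placeholder from the text.

```python
from urllib.parse import urlencode


def build_product_urls(base_url: str, product_ids: list[str]) -> list[str]:
    """Return one product URL per ID, skipping blank entries."""
    urls = []
    for raw_id in product_ids:
        product_id = raw_id.strip()
        if not product_id:
            continue  # ignore empty lines left over from copy and paste
        urls.append(f"{base_url}?{urlencode({'productId': product_id})}")
    return urls


if __name__ == "__main__":
    ids = ["A001", "A002", " A003 ", ""]
    for url in build_product_urls("https://www.xxx.com/list", ids):
        print(url)
```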

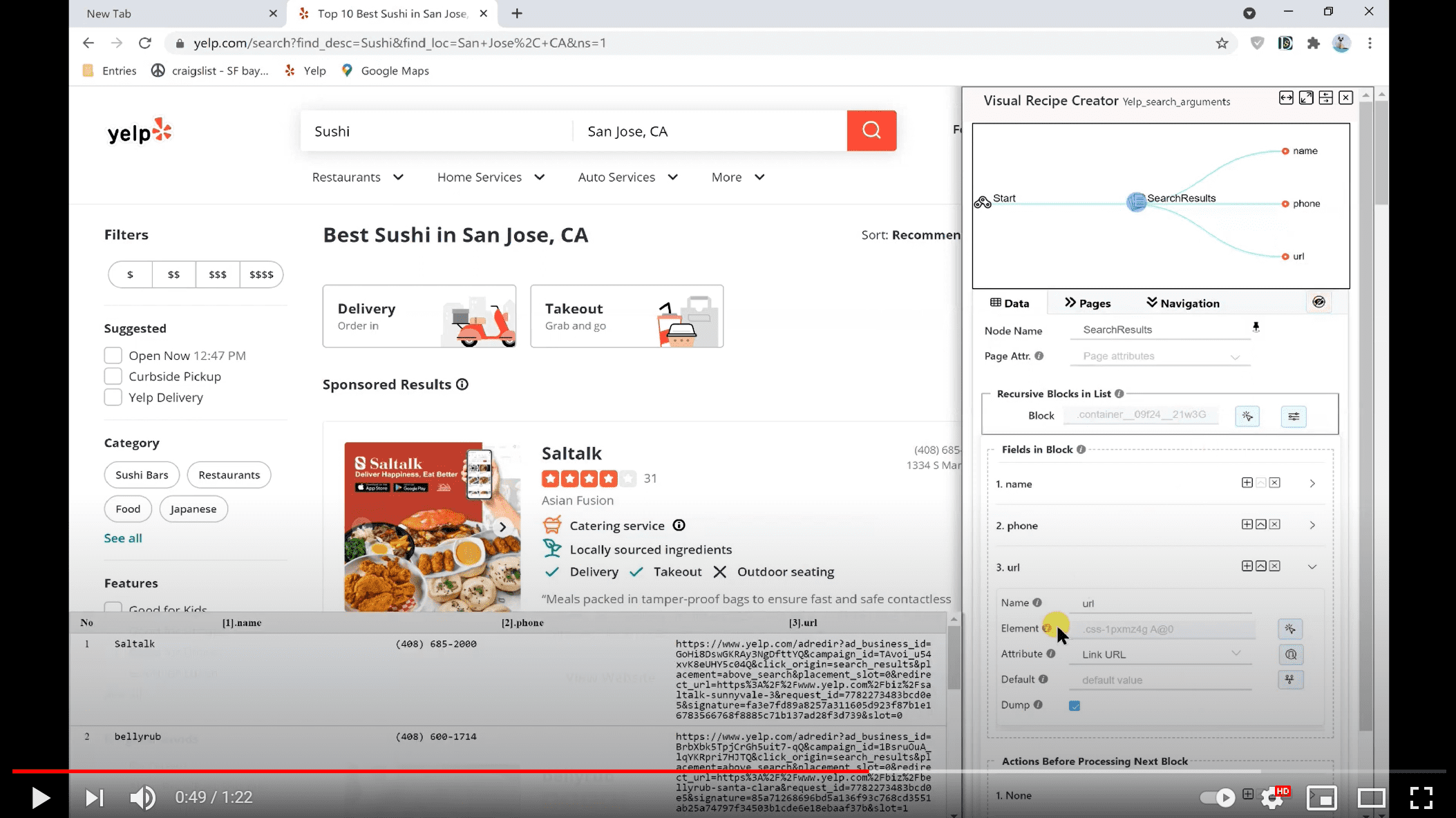

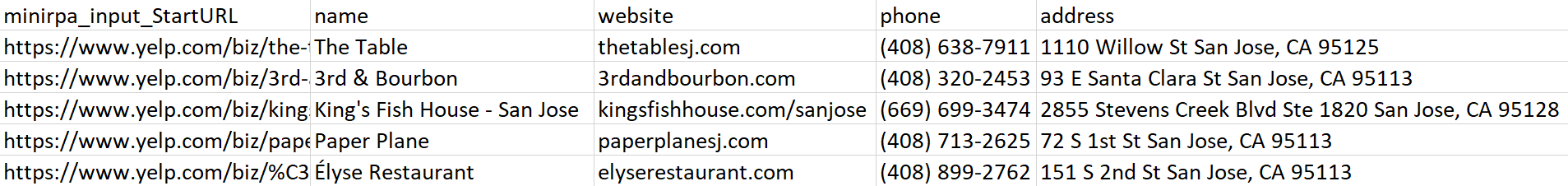

We again take Yelp as the example. In the section Detail page scraping, we defined a recipe to scrape a Yelp restaurant page. Now we have a list of such Yelp restaurants to scrape:

https://www.yelp.com/biz/the-table-san-jose

https://www.yelp.com/biz/3rd-and-bourbon-san-jose-2

https://www.yelp.com/biz/kings-fish-house-san-jose-san-jose

https://www.yelp.com/biz/paper-plane-san-jose-2

https://www.yelp.com/biz/%C3%A9lyse-restaurant-san-jose-2
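The last URL above contains `%C3%A9`, which is the percent-encoded UTF-8 form of the letter "é". If the URL list is built from restaurant slugs that contain such characters, they can be encoded before the list is handed to the recipe. This is a small sketch using Python's standard library. The helper name `yelp_biz_url` is an assumption made for illustration.

```python
from urllib.parse import quote, unquote


def yelp_biz_url(slug: str) -> str:
    """Build a Yelp business URL, percent-encoding non-ASCII characters."""
    return "https://www.yelp.com/biz/" + quote(slug)


if __name__ == "__main__":
    # "\u00e9" is the letter e with an acute accent.
    print(yelp_biz_url("\u00e9lyse-restaurant-san-jose-2"))
    # Decoding goes the other way and restores the readable slug.
    print(unquote("%C3%A9lyse-restaurant-san-jose-2"))
```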

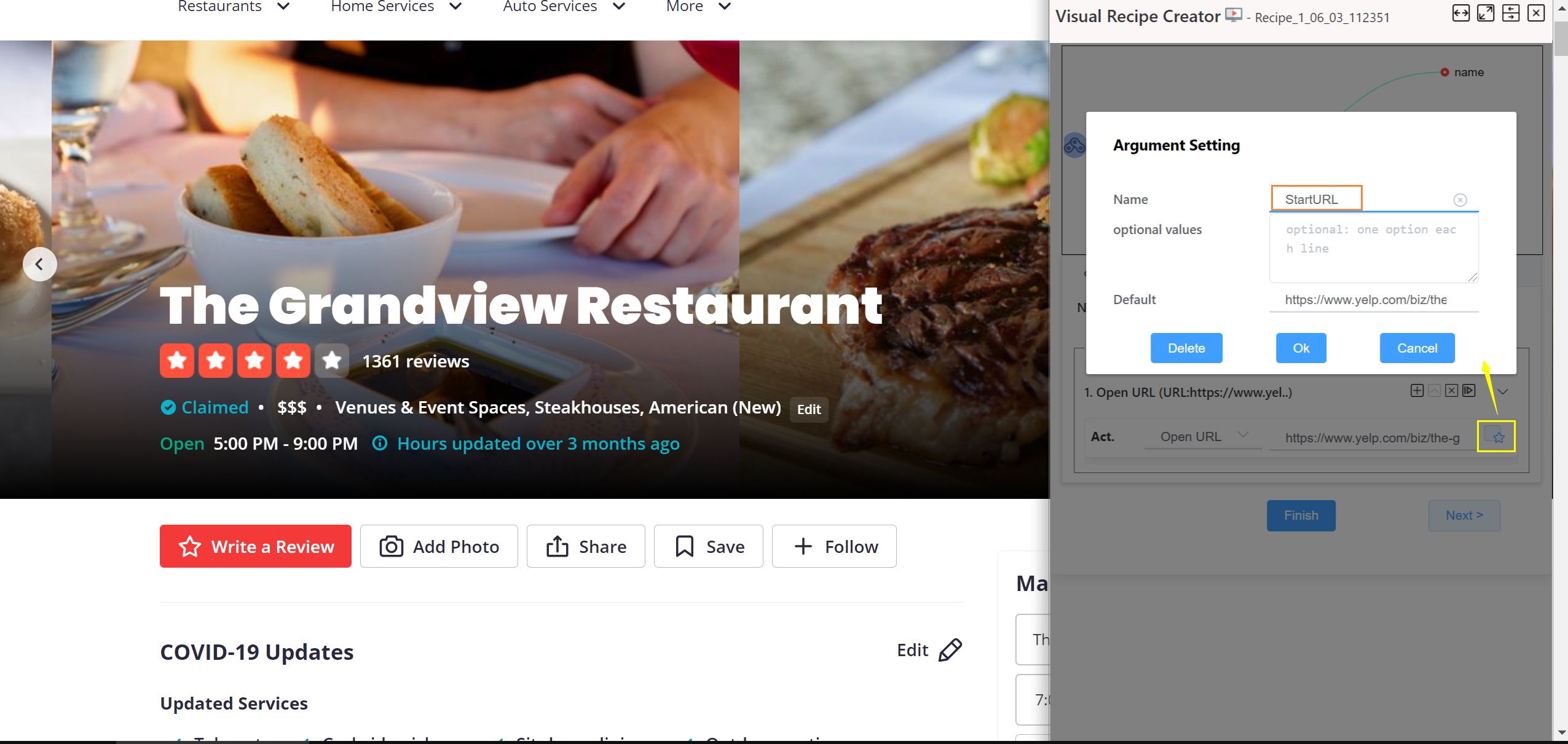

To make the recipe scrape a list of URLs, all we need to do is open the recipe and add an argument declaration to the openURL action.

Click the star icon on the right side of the openURL action to open the Argument Setting dialog. Each argument is an entry. NDS lets the user feed values to the recipe through these entries when starting the recipe.

On the Argument Setting dialog:

- Name: set a name for the argument (required). The name will be shown on the recipe start UI.

- Optional Values: define all acceptable values for the argument (optional).

- Default: the default value for the argument (required). If 'Optional Values' is defined, the default value must be one of them.
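The three fields and their rules can be summarised in code. The sketch below is not NDS's internal data model. `ArgumentSetting` and its `validate` method are hypothetical names that only mirror the rules listed above: Name and Default are required, and Default must be one of the Optional Values when any are defined.

```python
from dataclasses import dataclass, field


@dataclass
class ArgumentSetting:
    """Illustrative model of one entry in the Argument Setting dialog."""

    name: str
    default: str
    optional_values: list[str] = field(default_factory=list)

    def validate(self) -> None:
        """Raise ValueError if the entry breaks one of the dialog's rules."""
        if not self.name.strip():
            raise ValueError("Name is required")
        if not self.default.strip():
            raise ValueError("Default is required")
        if self.optional_values and self.default not in self.optional_values:
            raise ValueError("Default must be one of the Optional Values")


if __name__ == "__main__":
    # A URL argument with no restriction on its values.
    url_arg = ArgumentSetting(
        name="url",
        default="https://www.yelp.com/biz/the-table-san-jose",
    )
    url_arg.validate()
    print(f"argument '{url_arg.name}' is valid")
```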

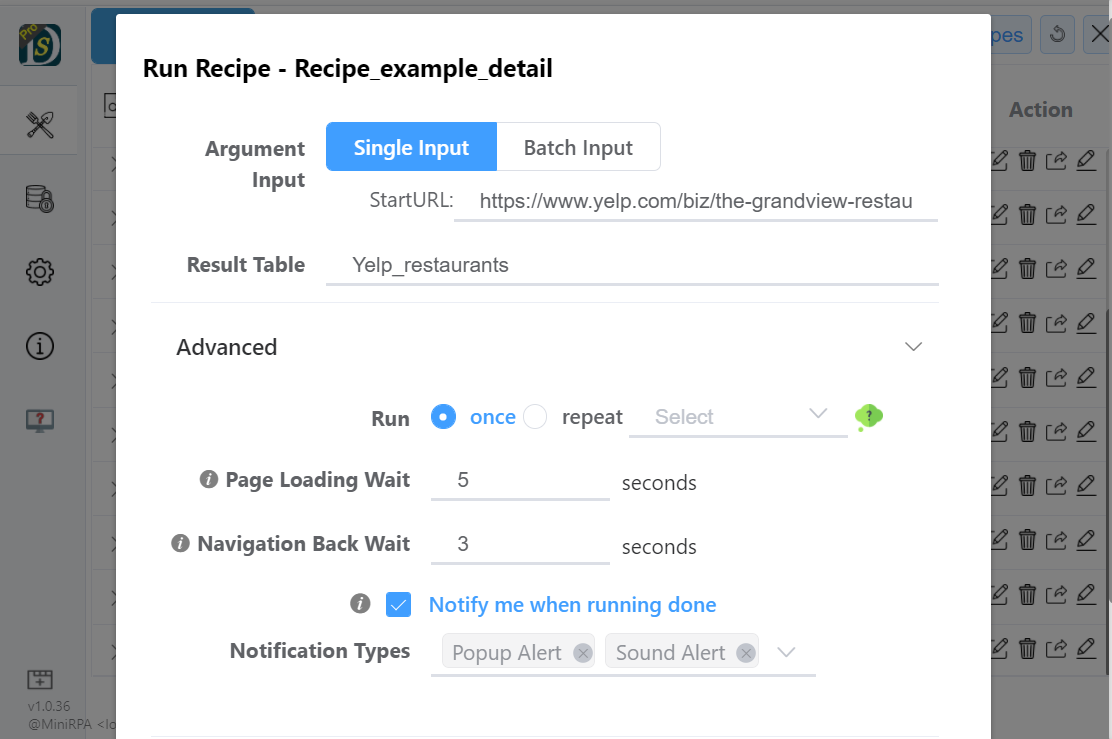

Now save the recipe and start it from the popup window.

The start UI now has an Argument Input setting with two options:

| Argument input type | UI |
|---|---|
| Single Input |  |
| Batch Input |  |

- Single Input: the recipe's arguments are listed, each with its default value. You can change the values before starting the recipe.

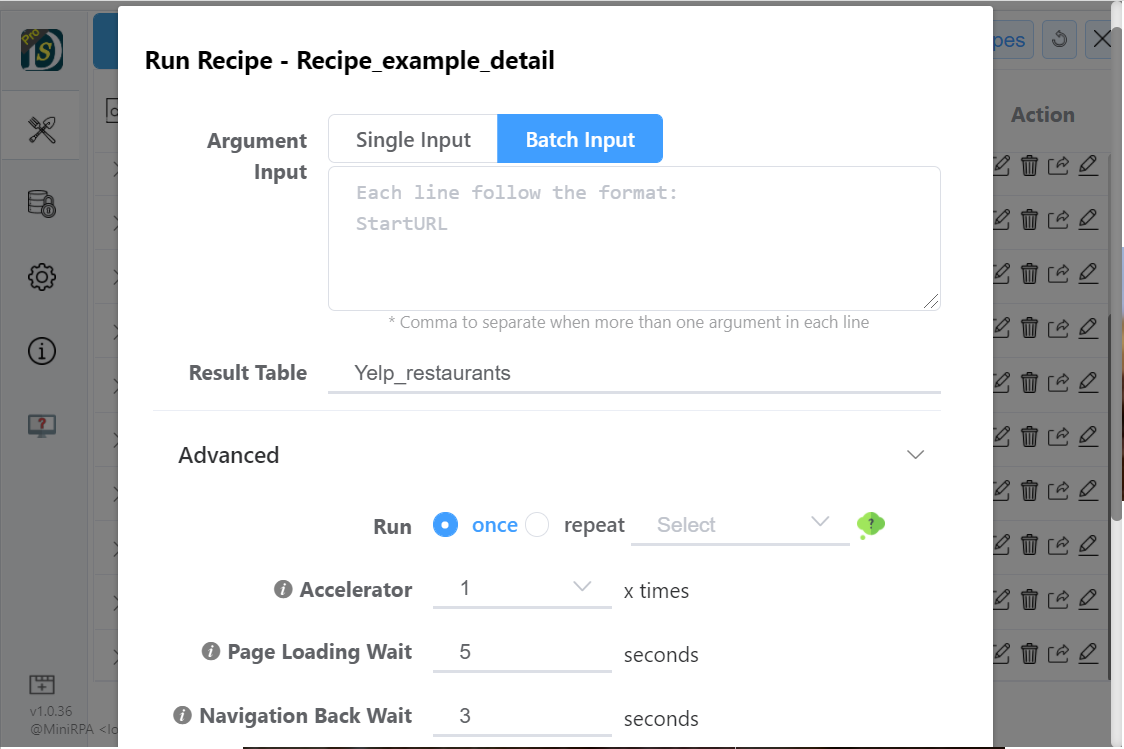

- Batch Input: the text box accepts multiple argument lines. Each line holds the argument values for one run of the recipe.

Here we select 'Batch Input' and paste the whole restaurant URL list into the box. NDS reads each line, feeds it to the recipe, and then executes the recipe. During scraping, NDS opens a new tab for each URL.
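Because NDS runs the recipe once per line, it can help to tidy the list before pasting it: stray blank lines or duplicated URLs would otherwise cause empty or repeated runs. The helper below is a sketch of such a clean-up step done outside NDS. It assumes the recipe has a single argument (the URL), so each line carries exactly one value. `to_batch_input` is a hypothetical name.

```python
def to_batch_input(urls: list[str]) -> str:
    """Return the URLs as one-per-line text, without blanks or duplicates."""
    seen = set()
    lines = []
    for raw in urls:
        url = raw.strip()
        if not url or url in seen:
            continue  # skip empty entries and URLs already listed
        seen.add(url)
        lines.append(url)
    return "\n".join(lines)


if __name__ == "__main__":
    restaurants = [
        "https://www.yelp.com/biz/the-table-san-jose",
        "https://www.yelp.com/biz/3rd-and-bourbon-san-jose-2",
        "",
        "https://www.yelp.com/biz/the-table-san-jose",
    ]
    # The printed text can be copied into the Batch Input box.
    print(to_batch_input(restaurants))
```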

The scraping result for each URL is stored in the same output data table.

So far we have learned how to use arguments to scrape a list of URLs. Next, we will use arguments to make scraping more dynamic.